Balancing innovation and ethics in AI/ML

By Staff Report August 1, 2025 5:31 pm IST

Electricity DISCOMs must ensure that consumer data privacy is not compromised even as grid digitalisation (and thus the use of AI/ML) has become inevitable to deliver efficiency, performance, customer experience and regulatory compliance.

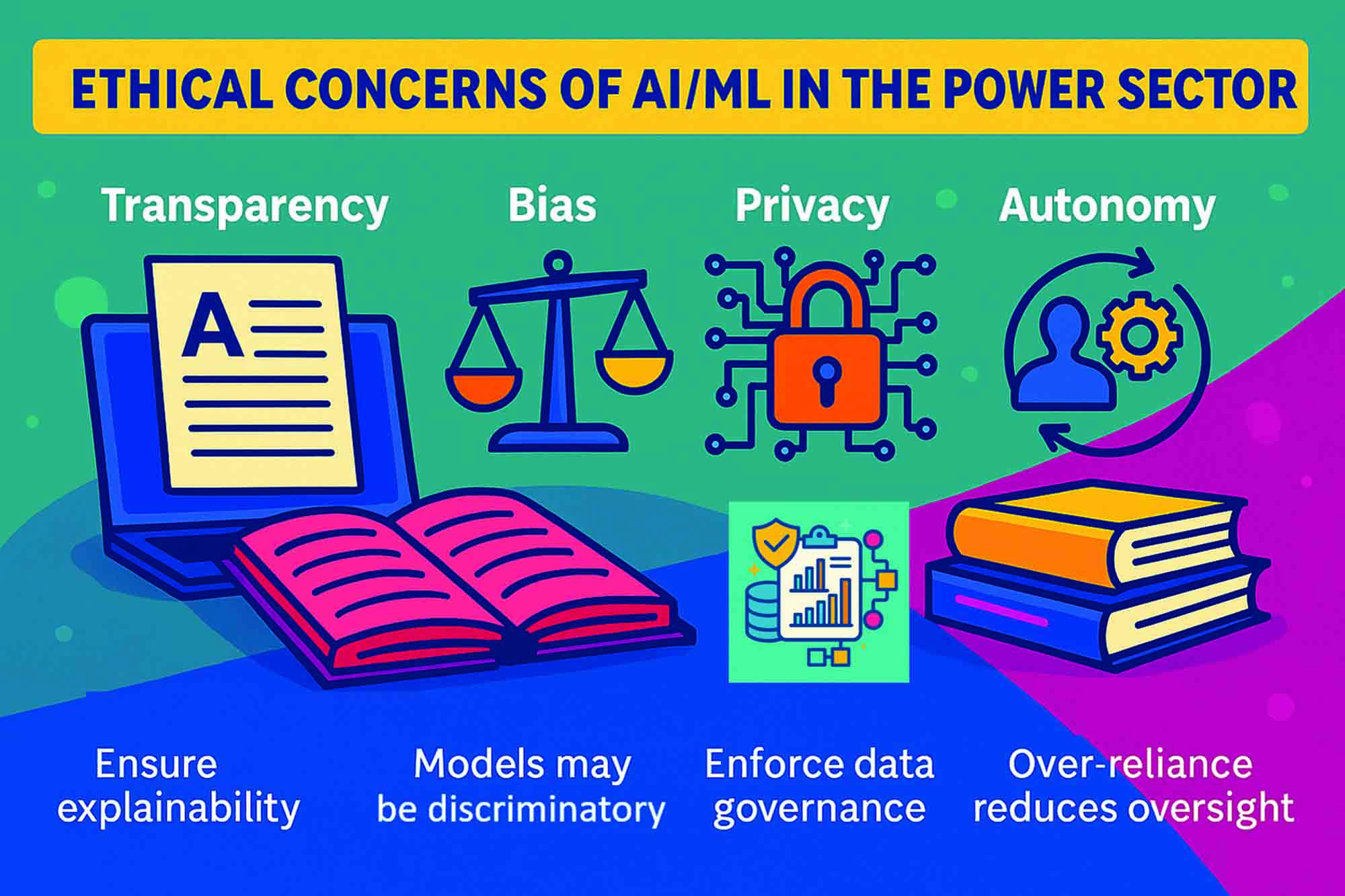

The increasing use of AI/ML applications in the electricity sector has raised ethical concerns regarding energy equity, accountability, transparency, and environmental impact.

Ethical concerns refer to actions that may be technically and legally compliant but ‘may not be the right thing to do’, if they do not meet the principles of fairness, justice and societal good. Such acts can cause reputational damage, loss of employee morale, attrition, and social backlash, and could lead to a loss of public trust, adversely affecting long-term business sustainability.

As an example, AI/ML is often used in applications such as smart metering and smart grids, which collect and analyse vast amounts of sensitive data. Electricity DISCOMs must ensure that consumer data privacy is not compromised even as grid digitalisation (and thus the use of AI/ML) has become inevitable to deliver efficiency, performance, customer experience and regulatory compliance.

Ethical concerns of AI/ML applications

Understanding how and where work processes could be compromised helps to identify the ethical concerns, as explained below:

Data collection: Smart meters collect energy consumption data at frequent intervals, providing insights into the customer energy use behaviour and lifestyle. This data, while valuable for optimising the grid, can lead to customer profiling, illegal surveillance and targeted advertisements for questionable gains. Access to energy consumption patterns could lead to discriminatory practices and even unfair energy pricing.

Data modelling: If the AI models used in Demand Response Systems (DRS) and load balancing applications are trained on non-representative, insufficient, or inaccurate data, it could lead to an unfair distribution of electricity, a lack of transparency in pricing, and discrimination in service delivery. For instance, AI algorithms for load shedding or predictive maintenance might deprioritise low-income or rural areas if the data reflects historical bias in power allocation and investments.

Workforce disruption and job displacement: Automation of tasks through AI/ML will inevitably impact the workforce in the power sector. Jobs related to meter reading, grid inspection, and routine maintenance are particularly vulnerable to automation. While new roles in data science and AI will emerge, utilities have an ethical responsibility to manage this transition. This includes retraining and reskilling programs for displaced workers, ensuring a just transition that does not leave behind those who depend on traditional energy jobs.

Consumer protection and tariff equity: AI can be used to develop dynamic and personalised electricity tariffs. While this can promote energy efficiency, it also carries the risk of creating inequitable pricing structures. Algorithms could charge higher rates to vulnerable consumers with less flexibility. AI models should be designed to ensure ethical pricing that does not exploit consumers. This involves providing clear information on tariff calculations and making energy more affordable for low-income groups.

Data consent: AI-based energy efficiency and demand-side management applications require access to consumers’ consumption data at a granular level for time-series analysis. Utilities should be obligated to obtain consent from consumers to access their data.

Model fixing: Unauthorised access to smart grid application data not only compromises privacy but could also be exploited unethically to train AI/ML models that are steered towards predetermined outcomes.

Environmental impact: AI applications are data-hungry, resource-intensive, and consume huge amounts of power, with adverse environmental implications. Utilities should be obliged to increase the share of renewable energy in data centres and optimise the applications to consume less energy.

Mitigating the ethical concerns of AI/ML

The following safeguards can be considered to uphold the ethical standards of AI/ML applications in the electricity sector:

Bias removal: Ensure that the data on which AI models are trained is as diverse and representative of the target population as possible. Data collected from various sources must be checked for inaccuracies, missing values and inconsistencies, using techniques such as data normalisation, algorithmic audits and bias correction.

Algorithmic audits: Perform regular audits of AI/ML algorithms to detect and remove biases, including testing the algorithms on diverse datasets and evaluating their performance. Utilities should be obligated to use accredited external agencies for these audits.
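One simple check that such an audit might include is a comparison of outcomes across consumer groups. The sketch below is illustrative only: the feeder records, the `area` labels, the function names and the review threshold of 1.5 are all assumptions, not values from any utility or regulator.

```python
from collections import defaultdict
from statistics import mean


def mean_by_group(records, group_key, value_key):
    """Average a numeric field for each group label."""
    buckets = defaultdict(list)
    for row in records:
        buckets[row[group_key]].append(row[value_key])
    return {group: mean(values) for group, values in buckets.items()}


def disparity_ratio(group_means):
    """Ratio of the highest group mean to the lowest group mean."""
    lowest = min(group_means.values())
    highest = max(group_means.values())
    return float("inf") if lowest == 0 else highest / lowest


# Hypothetical weekly load-shedding schedule produced by a model.
schedule = [
    {"feeder": "F1", "area": "urban", "shed_hours": 2.0},
    {"feeder": "F2", "area": "urban", "shed_hours": 3.0},
    {"feeder": "F3", "area": "rural", "shed_hours": 6.0},
    {"feeder": "F4", "area": "rural", "shed_hours": 4.0},
]

REVIEW_THRESHOLD = 1.5  # assumed policy value, for illustration only

means = mean_by_group(schedule, "area", "shed_hours")
ratio = disparity_ratio(means)
needs_review = ratio > REVIEW_THRESHOLD
print(means, ratio, needs_review)
```

In this made-up schedule, rural feeders are shed for twice as many hours on average as urban feeders, so the schedule would be flagged for human review. A real audit would look at many more outcomes and would need to account for legitimate technical reasons behind any difference.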

Model testing: Test AI/ML models rigorously to identify gaps and fix errors. Use metrics to check model performance and accuracy. Retrain the models on new datasets to fine-tune parameters and act upon user feedback to improve model accuracy.
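As a minimal sketch of metric-based testing, the example below scores a demand forecast separately for each consumer segment using the mean absolute percentage error (MAPE), so that a model that looks accurate overall but performs poorly for one segment is noticed. The segment labels and the numbers are hypothetical, and MAPE is only one of several metrics that could be used.

```python
def mape(actual, predicted):
    """Mean absolute percentage error in percent; zero actuals are skipped."""
    pairs = [(a, p) for a, p in zip(actual, predicted) if a != 0]
    if not pairs:
        raise ValueError("no non-zero actual values to score")
    return 100.0 * sum(abs(a - p) / abs(a) for a, p in pairs) / len(pairs)


def mape_by_segment(rows):
    """Compute MAPE per segment from (segment, actual, predicted) tuples."""
    segments = {}
    for segment, actual, predicted in rows:
        actuals, predictions = segments.setdefault(segment, ([], []))
        actuals.append(actual)
        predictions.append(predicted)
    return {name: mape(a, p) for name, (a, p) in segments.items()}


# Hypothetical hourly demand (kWh): segment, actual value, model forecast.
rows = [
    ("urban", 100.0, 95.0),
    ("urban", 200.0, 210.0),
    ("rural", 50.0, 40.0),
    ("rural", 80.0, 100.0),
]

scores = mape_by_segment(rows)
for segment, score in scores.items():
    print(f"{segment}: {score:.1f}% MAPE")
```

Here the forecast error is about 5% for the urban segment and about 22.5% for the rural segment, which would suggest retraining with more representative rural data before the model is relied upon.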

Data minimisation and anonymisation: Collect only the data that is strictly necessary for the AI models to function. Wherever possible, use anonymised or aggregated data to protect individual identities in smart grid applications.
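To illustrate aggregation, the sketch below sums smart meter readings per feeder and hour, drops the meter identifiers, and suppresses any group with fewer households than a minimum size. The field names, the sample readings and the threshold of three households are assumptions made for the example. Aggregation of this kind reduces the risk of re-identification but does not by itself guarantee anonymity.

```python
from collections import defaultdict

MIN_HOUSEHOLDS = 3  # assumed suppression threshold, for illustration only


def aggregate_by_feeder(readings, min_households=MIN_HOUSEHOLDS):
    """Sum kWh per (feeder, hour), dropping meter IDs and small groups."""
    totals = defaultdict(float)
    meters = defaultdict(set)
    for reading in readings:
        key = (reading["feeder"], reading["hour"])
        totals[key] += reading["kwh"]
        meters[key].add(reading["meter_id"])
    # Publish only groups large enough that no single household stands out.
    return {
        key: {"kwh": round(totals[key], 3), "households": len(meters[key])}
        for key in totals
        if len(meters[key]) >= min_households
    }


# Hypothetical hourly readings from individual smart meters.
readings = [
    {"meter_id": "M1", "feeder": "F1", "hour": 18, "kwh": 1.2},
    {"meter_id": "M2", "feeder": "F1", "hour": 18, "kwh": 0.8},
    {"meter_id": "M3", "feeder": "F1", "hour": 18, "kwh": 1.5},
    {"meter_id": "M4", "feeder": "F2", "hour": 18, "kwh": 2.0},
]

result = aggregate_by_feeder(readings)
print(result)
```

Feeder F1 is reported as a single total for three households, while feeder F2 is withheld because it contains only one household, whose consumption would otherwise be exposed directly.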

Community and stakeholder consultation: Involve local communities, consumer advocacy groups and social scientists in the design and review process. Their input can help identify potential biases and ensure that system objectives align with public values.

Managing workforce transition: Proactive reskilling and upskilling will help. Companies will have to provide a safety net for workers in transition to help them learn and adapt.

Monitoring ethical standards of AI/ML applications

It is incumbent upon energy utilities to shoulder their ethical responsibility when working with AI/ML applications, as well as to conform to the ethical standards of transparency, accountability, fairness, and privacy.

Best practices in AI/ML implementation

The following diagram illustrates the best practices in ensuring standards and ethics in AI/ML implementation:

-Diagram-

Additionally, having an effective enterprise risk management program is considered essential for managing AI/ML risks, as it brings transparency to the decision-making process and enables optimisation of risk-reward tradeoffs.

Enterprise risk management in AI/ML

Designing an effective Enterprise Risk Management (ERM) program can play a crucial role in identifying, assessing and mitigating the ethical concerns arising from the use of AI/ML, as with all other risks covered above. A robust ERM program embeds ethical risk identification within strategic, operational, and compliance risk assessments, enabling power utilities to map AI/ML-related risks to stakeholder impacts proactively.

By establishing governance structures, clear accountability, and ethical guidelines for AI/ML deployment, the ERM framework ensures responsible innovation that aligns with regulatory expectations and fosters public trust. It also facilitates the development of risk-informed policies for data handling, fairness audits and explainability standards, helping utilities balance technological advancement with ethical integrity and long-term resilience.

Conclusion

The integration of AI/ML into power sector applications has revolutionised grid operations, asset management, demand forecasting and renewable energy optimisation. However, these technologies also raise ethical concerns that must be proactively addressed. Many AI/ML models, particularly deep learning algorithms, operate as “black boxes,” raising concerns about transparency, accountability, and trust. AI models trained on historical data may inadvertently perpetuate bias by deprioritising customer service for certain classes or regions, widening the digital divide.

To address these ethical concerns, a multi-pronged approach is required. The deployment of AI/ML tools must adhere to the principles of transparency and accountability. AI models must be trained responsibly on representative and accurate data to avoid biased outputs that create ethical issues, such as inequitable electricity distribution, unfair energy pricing, and the leakage of sensitive information. Fairness must be embedded into AI models to detect and eliminate biases, serving the interests of customers, especially those from weaker sections. Robust data governance frameworks must be established to safeguard privacy and security while training AI models, ensuring compliance with data protection regulations. Implementing a robust ERM framework will also help in proactively identifying and mitigating potential ethical challenges of AI/ML adoption.

*********************************

Authored by:

K Ramakrishnan, Chief Advisor and Mentor- EnTruist Power (Former ED- NTPC)

Soubhagya Parija, Principal Advisor (Risk Management)- EnTruist Power (Former Chief Risk Officer- FirstEnergy)

Jayant Sinha, Senior Principal Consultant (E&U)- EnTruist Power (Ex. NTPC, Capgemini)
